⚡️ 1-Minute DISCO Download

In the digital age, the volume of data that companies and individuals produce in the course of business is increasing exponentially. The technology-assisted review (TAR) workflows of the previous decade have come under strain. As data volume, complexity, and expectations have risen, a new champion has emerged: Generative AI (GenAI)-assisted review.

Document reviews carried out with cutting-edge, purpose-built tools such as DISCO Auto Review (a product I helped develop in DISCO’s AI Lab) are now surpassing traditional TAR, with recall rates consistently above 90%, greater versatility, and the ability to handle data types and languages that stymie traditional approaches.

In this article, I’ll explore the evolution of document review, from the rise of TAR to the new wave of GenAI-powered workflows, and dig into the differences that matter most for modern legal teams. From ease of use to accuracy, explainability, and real-world adoption, the landscape is shifting fast. Here’s what’s changing, what’s working, and what’s next.

From linear review to TAR 2.0

Before AI became a buzzword in legal tech, document review was entirely manual. Attorneys and contract reviewers would comb through documents one by one: a linear review process that was time-consuming, expensive, and often inconsistent. As anyone who has done document review knows, two reviewers can code the same document differently.

Even for a single reviewer, there are human factors that will affect coding consistency and quality: factors like how thorough or attentive or focused a reviewer is when a particular document is in front of them, and how much time they have spent developing their understanding of the matter. And now, factor in the increasing time and expense required to review larger and larger databases.

That combination of inconsistency and cost set the stage for technology-assisted review (TAR).

Enter TAR 1.0

TAR 1.0, the first generation of technology-assisted document review, applied supervised machine learning to the review process.

With TAR 1.0, review teams would help train a machine-learning-based predictive coding model by coding statistically sampled training sets of documents. The performance of the model would then be evaluated on a separate set of documents (the “control set”), also human-labeled but not used to train the model. Additional sample sets of documents would be human-coded and added to the training set to improve the model until it reached predefined precision and recall levels as evaluated on the control set.

The model would then be run on the remaining documents in the database. Documents it labeled responsive could be produced, or the model could rank documents by their likelihood of being responsive so they could be reviewed further.
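To make the TAR 1.0 stopping rule concrete, here is a minimal Python sketch. It assumes documents are identified by string IDs and that `train` is a hypothetical stand-in for whatever predictive-coding classifier a platform actually uses. The loop adds human-coded sample sets to the training set, retrains, and stops once precision and recall on the held-out control set reach predefined targets.

```python
from typing import Callable, Dict, Iterable, Tuple

# doc_id -> True if a human reviewer coded the document responsive
Labels = Dict[str, bool]
# Hypothetical model interface: a trained model maps doc_id -> predicted responsive?
Model = Callable[[str], bool]


def precision_recall(predicted: Labels, truth: Labels) -> Tuple[float, float]:
    """Precision and recall of model predictions against human labels."""
    tp = sum(1 for d in truth if predicted[d] and truth[d])
    fp = sum(1 for d in truth if predicted[d] and not truth[d])
    fn = sum(1 for d in truth if not predicted[d] and truth[d])
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def tar1_train(
    sample_batches: Iterable[Labels],
    control_set: Labels,
    train: Callable[[Labels], Model],  # hypothetical classifier trainer
    min_precision: float,
    min_recall: float,
) -> Tuple[Model, float, float, int]:
    """Grow the training set batch by batch until control-set targets are met."""
    training_set: Labels = {}
    for batch in sample_batches:
        training_set.update(batch)   # add a newly human-coded sample set
        model = train(training_set)  # retrain on everything coded so far
        # Evaluate only on the control set, which is never used for training.
        predicted = {d: model(d) for d in control_set}
        precision, recall = precision_recall(predicted, control_set)
        if precision >= min_precision and recall >= min_recall:
            return model, precision, recall, len(training_set)
    raise RuntimeError("Targets not reached: code additional sample sets.")
```

Once the function returns, the accepted model would be applied to the rest of the database, as described above.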

It wasn’t the fastest or the easiest method, but it was statistically sound. Courts accepted it. Review costs dropped. And a bit over a decade ago, it was at the cutting edge.


But TAR 1.0 had limitations: heavy up-front work, rigidity, and a lack of explainability. So the industry moved on.

Meet TAR 2.0

TAR 2.0, the modern evolution of TAR 1.0, introduced continuous active learning (CAL), which allows the system to learn on the fly.

With TAR 2.0, the model would first be trained on a small “seed set” of coded documents. From then on, rather than waiting for large new sample sets to be fully coded before retraining, the model would adjust every time a new document (or a small batch of documents) was coded.

Subsequent documents would be presented to the reviewer based on the model’s predicted likelihood of relevance, so the system improved bit by bit, in near-real time. This relieved some of the burden of TAR 1.0 and made the process faster and more adaptable.
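The CAL loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions: a deliberately simple term-weighting scorer stands in for a real classifier, and `code_document` is a hypothetical callback representing the human reviewer’s decision. What it shows is the shape of the loop: train on a seed set, present the highest-scoring unreviewed document, and update the model immediately after each coding decision.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Set


def cal_review(
    docs: Dict[str, str],                 # doc_id -> document text
    seed: Dict[str, bool],                # small human-coded seed set
    code_document: Callable[[str], bool], # hypothetical human coding step
    budget: int,                          # how many documents to review
) -> List[str]:
    """Toy continuous active learning loop; returns doc IDs in review order."""
    weights: DefaultDict[str, float] = defaultdict(float)

    def terms(doc_id: str) -> Set[str]:
        return set(docs[doc_id].lower().split())

    def learn(doc_id: str, responsive: bool) -> None:
        # Toy update: nudge each term's weight toward the human decision.
        for term in terms(doc_id):
            weights[term] += 1.0 if responsive else -1.0

    def score(doc_id: str) -> float:
        return sum(weights[t] for t in terms(doc_id))

    # Initial training on the small seed set.
    for doc_id, responsive in seed.items():
        learn(doc_id, responsive)

    reviewed: List[str] = []
    remaining = [d for d in docs if d not in seed]
    while remaining and len(reviewed) < budget:
        next_doc = max(remaining, key=score)      # most likely relevant first
        remaining.remove(next_doc)
        learn(next_doc, code_document(next_doc))  # model updates after every coded doc
        reviewed.append(next_doc)
    return reviewed
```

A production system would use a proper classifier and re-rank in batches, but the feedback structure is the same.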

Yet even with these advances, both traditional TAR 1.0 and 2.0 are still missing some key pieces that attorneys want to see:

- Being able to tell the model what to do (which is more natural and often faster than showing it what to do with many examples) and

- Getting back an explanation from the model of why it coded a document as it did.

Purpose-built GenAI review tools fill these gaps.

Hitting the limits: Where TAR falls short

As revolutionary as TAR has been, it carries inherent limitations:

- Showing rather than telling

- Dependence on large amounts of training data upfront

- Lack of explainability

- Scalability

I’ll break these down below.

1. Showing rather than telling

With traditional TAR, review teams must show the system what’s relevant through repeated coding of training sets. This doesn’t align with how legal teams naturally work – i.e., writing tag definitions and review protocols in plain English.

2. Dependence on large amounts of training data

Traditional TAR workflows depend on showing the system hundreds or even thousands of coded examples before it can begin classifying documents effectively. That upfront training creates both a time burden and a resource challenge, especially on large matters.

3. Lack of explainability

Traditional TAR models assign relevance scores, but they don’t provide plain-English explanations for why a document was coded one way or another. Reviewers see rankings or scores, but not the reasoning behind them.

That lack of transparency makes it harder to audit decisions, identify blind spots, or adjust review strategy as new information emerges.

4. Scalability

Traditional TAR tools weren’t built for the messiness of modern data. Discovery now ranges from Slack threads and cloud-based attachments to emoji-heavy chats and ephemeral messages. Applying a trained model to these diverse, fragmented formats often requires additional preprocessing or manual review, eroding the very efficiency TAR promised.

In short, while traditional TAR was a powerful step forward, there is a better way that meets lawyers where they are. Legal teams aren’t just looking for tools that assist the review process. They need tools that they can understand, and that act as a force multiplier for a legal team’s understanding of a case.

Enter generative AI: A new chapter in ediscovery

The limitations of traditional TAR opened the door to a new kind of intelligence in legal review, one that works based on written instructions from lawyers.

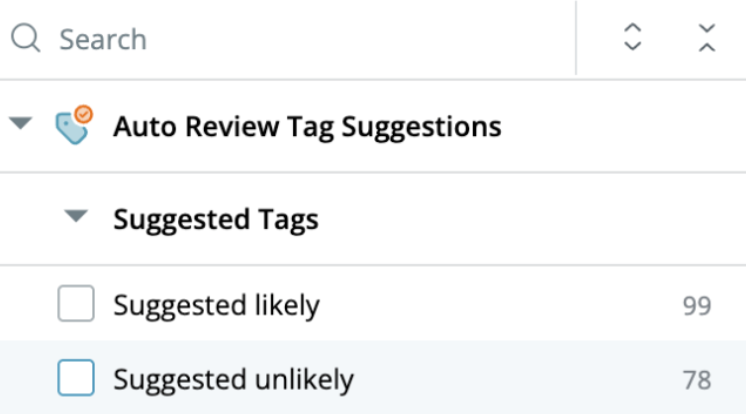

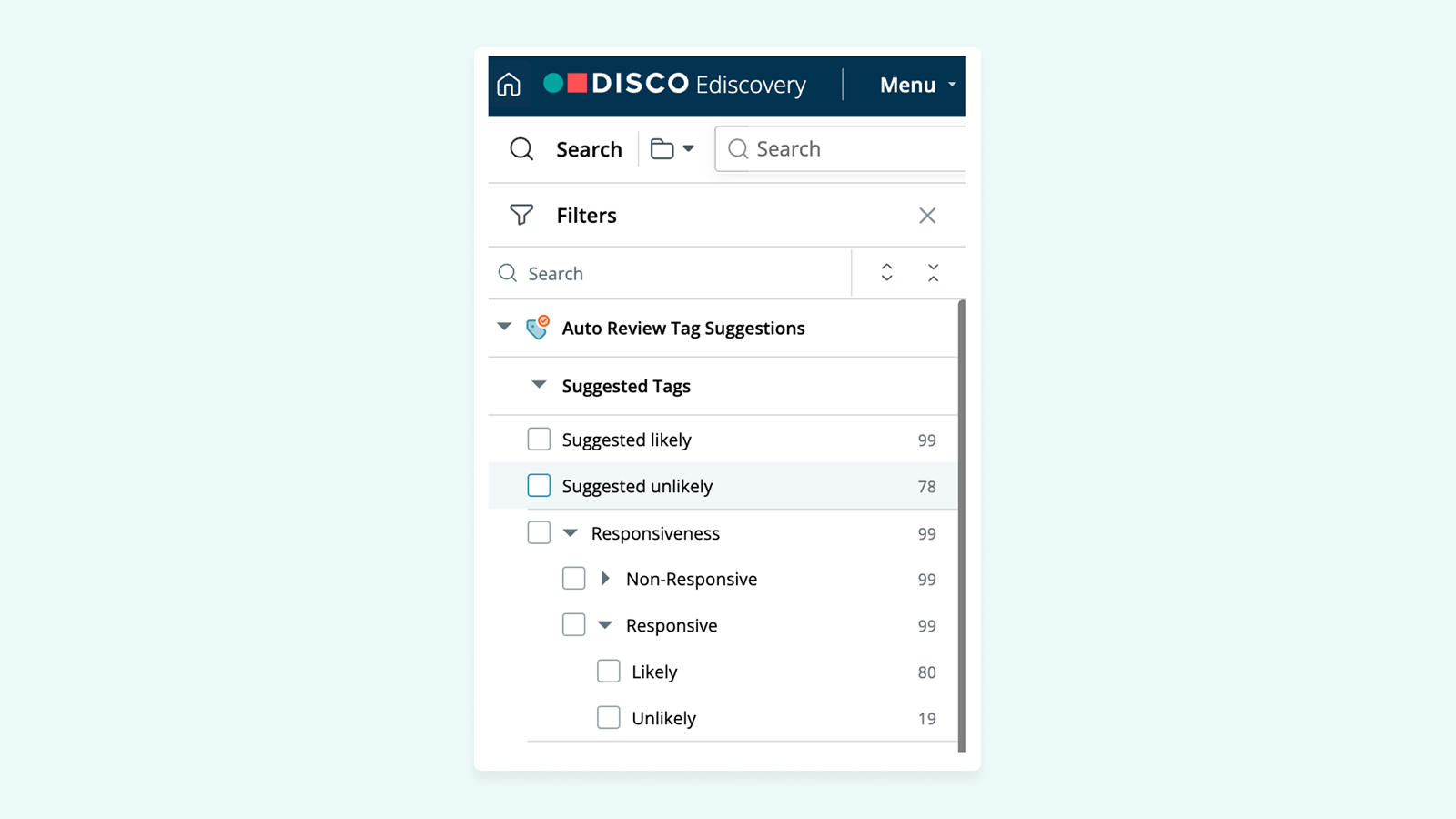

Generative AI marks a shift from learning by example to language-based reasoning. Instead of coding hundreds of documents to train a system, case teams can now define tag descriptions in plain English, much like drafting a review protocol, and immediately begin evaluating how well the system understands and applies those tags.

This early feedback loop is where GenAI shines. Reviewers can assess tagging suggestions in context and refine their instructions on the fly. That kind of adaptability is difficult to achieve with TAR alone.

And because GenAI is trained to work with language, not just metadata or coded examples, it can handle newer, less structured formats, like chat logs, Slack threads, and mixed-document sets, while providing a level of flexibility that predictive models typically struggle with.

That’s not to say GenAI replaces TAR. It doesn’t.

But it reframes the approach. Instead of asking how many documents you need to train a model, the questions become:

How clearly can you define your instructions?

and,

How quickly can you test and improve the system’s responses to your instructions?

For legal teams under pressure to move fast and keep their reviews defensible, that shift is more than technological. It’s strategic. And it’s natural. Attorneys are already experts at expressing themselves with precision.


TAR vs. GenAI: Key differences that matter

GenAI introduces a fundamentally different review experience from traditional TAR workflows. At a glance, both approaches aim to reduce manual burden and surface the most relevant documents quickly. But under the hood, they work and feel very different.

Let’s look at how they compare across a few key dimensions.

Accuracy & flexibility

TAR’s accuracy depends heavily on training data. Once a model has seen enough examples, it can deliver impressive consistency at scale, assuming the examples were accurate and comprehensive. But the front-loaded nature of this process can be a barrier.

You usually need hundreds or thousands of tagged documents to get started with TAR. And if your understanding of relevance evolves mid-review (as it often does), the model will need retraining.

By contrast, GenAI offers a more flexible approach. Teams can write tag definitions in plain English, the way they would for a protocol, and immediately see how the system interprets those instructions on a small set of documents. This allows for faster iteration and better alignment with evolving case strategy.
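As an illustration of that iteration loop, here is a minimal Python sketch. It assumes a hypothetical `suggest` function that sends a tag definition and a document to a GenAI model and returns a yes/no suggestion plus a plain-English explanation; no real product API is implied. Each definition carries a version number so changes stay traceable, and the disagreements with human coding on a small sample show where the wording needs work.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class TagDefinition:
    name: str
    description: str  # plain-English instruction, as written in a review protocol
    version: int      # bump on every edit so each change is traceable


# Hypothetical GenAI call: (definition, document text) -> (tag applies?, explanation)
Suggester = Callable[[TagDefinition, str], Tuple[bool, str]]


def try_definition(
    tag: TagDefinition,
    sample: Dict[str, str],        # small sample: doc_id -> text
    human_codes: Dict[str, bool],  # how attorneys coded the same sample
    suggest: Suggester,
) -> Tuple[float, List[Tuple[str, str]]]:
    """Run one version of a tag definition on a small, human-coded sample.

    Returns the agreement rate and (doc_id, explanation) pairs for every
    disagreement: the cases to read before revising the definition.
    """
    agree = 0
    disagreements: List[Tuple[str, str]] = []
    for doc_id, text in sample.items():
        applies, explanation = suggest(tag, text)
        if applies == human_codes[doc_id]:
            agree += 1
        else:
            disagreements.append((doc_id, explanation))
    return agree / len(sample), disagreements
```

Revising the description and re-running with `version` incremented gives a simple, reviewable history of how the instructions evolved.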

In short, GenAI offers a level of agility that TAR can’t match.


Transparency & explainability

TAR models can rank documents with a high, medium, or low relevance score, but they rarely offer an explanation as to why a document was ranked the way it was. For reviewers, that can lead to uncertainty. For courts, it can raise questions about defensibility.

With GenAI, instead of opaque scores, reviewers get narrative justifications for tagging decisions. Explanations describe in natural language how a tagging decision was made.

While this doesn’t eliminate the need for validation, it offers a level of transparency that helps build trust. Reviewers can understand the logic, audit it, and revise it, all in the same workflow.

Moreover, the coding suggestions and explanations provided by a GenAI model can help you identify potential blind spots or areas for improvement in a review protocol. This is not easy to do with traditional TAR.

Speed & efficiency

TAR is fast, once the model is trained. But that training phase takes time, and errors early in the process can compound downstream. There’s also overhead involved in validating the model, running QC workflows, and recalibrating as issues emerge.

GenAI reduces time to insight by making the system easier to adopt and adapt. Because reviewers can interact with the system in natural language and see preliminary coding suggestions on small samples of documents right away, GenAI accelerates setup and minimizes friction. This streamlines the front end of review, complementing TAR’s bulk-classification power while making it easier to apply human judgment where it counts.

Workflow impacts: Rethinking review with GenAI

These factors give GenAI-assisted review the competitive edge:

No need for upfront training sets: Traditional TAR requires teams to invest significant time up front, coding hundreds or thousands of documents before meaningful review can begin. GenAI removes that barrier. By allowing teams to start with natural language instructions, review can begin immediately, accelerating early-phase work while still supporting statistical validation as the review progresses.

Fast iteration: In TAR, adjusting a review strategy often means retraining the model and revalidating performance, adding days or weeks to a project. With GenAI-assisted review, teams can refine tag instructions on the fly, adjusting definitions and protocols in real time. This makes it easier to adapt to evolving case strategies without disrupting the larger review effort.

Explainable output: One of the key challenges with TAR is its black-box nature: reviewers see relevance scores but not the reasoning behind them. GenAI changes that by justifying tagging suggestions in plain English, helping reviewers make informed decisions and making the process more transparent for clients, courts, and opposing parties.

Workflow compatibility: Teams don’t have to choose between TAR and GenAI. TAR provides the structured foundation. GenAI builds on it, adding explainability and faster protocol refinement without disrupting established workflows. The key is maintaining human oversight, clear documentation of review protocols, and statistical sampling plans, just as TAR workflows require. Done right, GenAI-assisted review upholds the same standards of reliability and auditability that courts have long accepted with TAR, while making the process more intuitive and adaptable for legal teams.

Faster onboarding for new team members: Getting new reviewers up to speed on a TAR-driven review can be time-consuming, especially when it comes to understanding model behavior and protocol nuances. GenAI lowers that barrier by allowing reviewers to chat with the system, learning through immediate feedback rather than relying solely on formal training or shadowing experienced team members.


Real-world use cases

Recognizing that it gives them an edge, legal teams are beginning to slot GenAI into processes where speed, flexibility, or transparency matter most. Some examples:

Protocol prototyping

Before kicking off a full-scale review, teams can draft tag definitions and test them with GenAI. Within hours, not days, they can start seeing results that help them identify unclear logic, spot edge cases, and refine the language to better match the document population. This cuts down on wasted review cycles and leaves the protocol in better shape for a TAR model, if one follows.


Short-deadline investigations

In internal investigations or regulatory matters with tight deadlines, there’s often no time to train a traditional model. Teams can use GenAI to surface potentially responsive documents, read system-generated justifications, and make faster, defensible tagging decisions without waiting on predictive scores.

Unstructured data triage

Modern datasets don’t come in tidy folders. Teams can use GenAI during their review process to do more than generate coding suggestions. They can interrogate a review database with much greater richness than by using keyword searches alone. They can summarize documents (including documents in foreign languages), ask questions, and get definitions for terms of art, persons, and entities mentioned in a document, which can jump-start the team’s understanding and cut the time required to get to high-quality results.

Post-review QC and insight generation

Even after a primary review is complete, GenAI can help legal teams make sense of the results. It can generate summaries of documents tagged with the same issue code, surface anomalies between tags and content, and support case strategy with high-level narratives built directly from reviewed material.


The defensibility debate

Defensibility has always been the North Star of ediscovery. No matter how sophisticated the tools become, legal teams must be able to explain, justify, and document how decisions were made. This is especially important when review outcomes are challenged in court.

TAR has earned its place in that conversation. Over more than a decade, predictive coding has been accepted by courts across jurisdictions, supported by statistical validation protocols and consistent documentation practices. Reviewers could point to training sets, model performance metrics, and sampling plans to demonstrate that their approach met reasonable standards of accuracy and completeness.

So where does GenAI fit?

Contrary to early skepticism, GenAI workflows can be just as auditable if the right controls are in place.

- Prompt documentation: The tag descriptions provided to the large language model (LLM) are recorded, versioned, and traceable. This creates a clear paper trail of how the system was instructed and how its behavior evolved.

- Explainable outputs: Rather than just assigning a numerical score, GenAI tools can offer plain-English justifications for each tagging suggestion. That level of transparency can help reviewers (and judges) understand the reasoning behind a decision, something black-box models rarely provide.

- Human review and accountability: At every step, a human remains in the loop. Reviewers assess, accept, or override GenAI’s suggestions, and the final tagging decisions remain under human control. This ensures that no document is coded solely on the recommendation of an LLM.

- Statistical validation: Review teams still use recall and precision metrics, measured against statistically sound sample sets, to determine how well the GenAI-assisted review is performing.
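For the statistical-validation step, one common approach is to estimate recall from a simple random sample of human-coded documents and report a confidence interval alongside the point estimate. The sketch below uses the Wilson score interval, a standard interval for a binomial proportion. The function name and the 95% default (z = 1.96) are assumptions for illustration, and a real validation protocol may use a different sampling design or interval.

```python
import math
from typing import Tuple


def recall_with_wilson_ci(
    found: int, responsive_in_sample: int, z: float = 1.96
) -> Tuple[float, float, float]:
    """Estimate recall and a Wilson score interval from a validation sample.

    found: sampled docs that humans coded responsive AND the review tagged responsive
    responsive_in_sample: all sampled docs that humans coded responsive
    z: standard normal quantile (1.96 gives roughly a 95% interval)
    Returns (point estimate, lower bound, upper bound).
    """
    if responsive_in_sample <= 0:
        raise ValueError("sample contains no responsive documents")
    if not 0 <= found <= responsive_in_sample:
        raise ValueError("found must be between 0 and responsive_in_sample")
    n = responsive_in_sample
    p = found / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return p, max(0.0, center - half), min(1.0, center + half)
```

For example, if humans coded 50 sampled documents responsive and the review had tagged 45 of them, the point estimate is 90% recall, with an interval of roughly 79% to 96%; larger samples narrow that range.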

In short, defensibility in a GenAI-assisted review doesn’t come from the model alone. It comes from the same principles that made TAR acceptable to courts in the first place: a combination of transparency, traceability, and human oversight.

As more legal teams document their workflows and validate their outcomes, GenAI is steadily earning its place not just as a useful tool, but as a trustworthy one.

Want to explore what this looks like in practice?

Here are a few next steps you can take:

- Discover Auto Review: Explore how GenAI-assisted review delivers faster results, plain-language explanations, and precision that meets modern review demands. Learn more about DISCO Auto Review.

- See GenAI + TAR in action: Learn how DISCO’s hybrid approach helps legal teams move faster with confidence. Explore DISCO Ediscovery.

- Meet Cecilia: Discover how GenAI tools like Cecilia Q&A and Auto Review are helping teams ask better questions, get better answers, and accelerate insight. See Cecilia AI in action.

- Dive deeper: Want to go deeper into how predictive tagging, cross-matter AI, and GenAI all come together in one platform? Take a guided tour of DISCO’s AI features.
